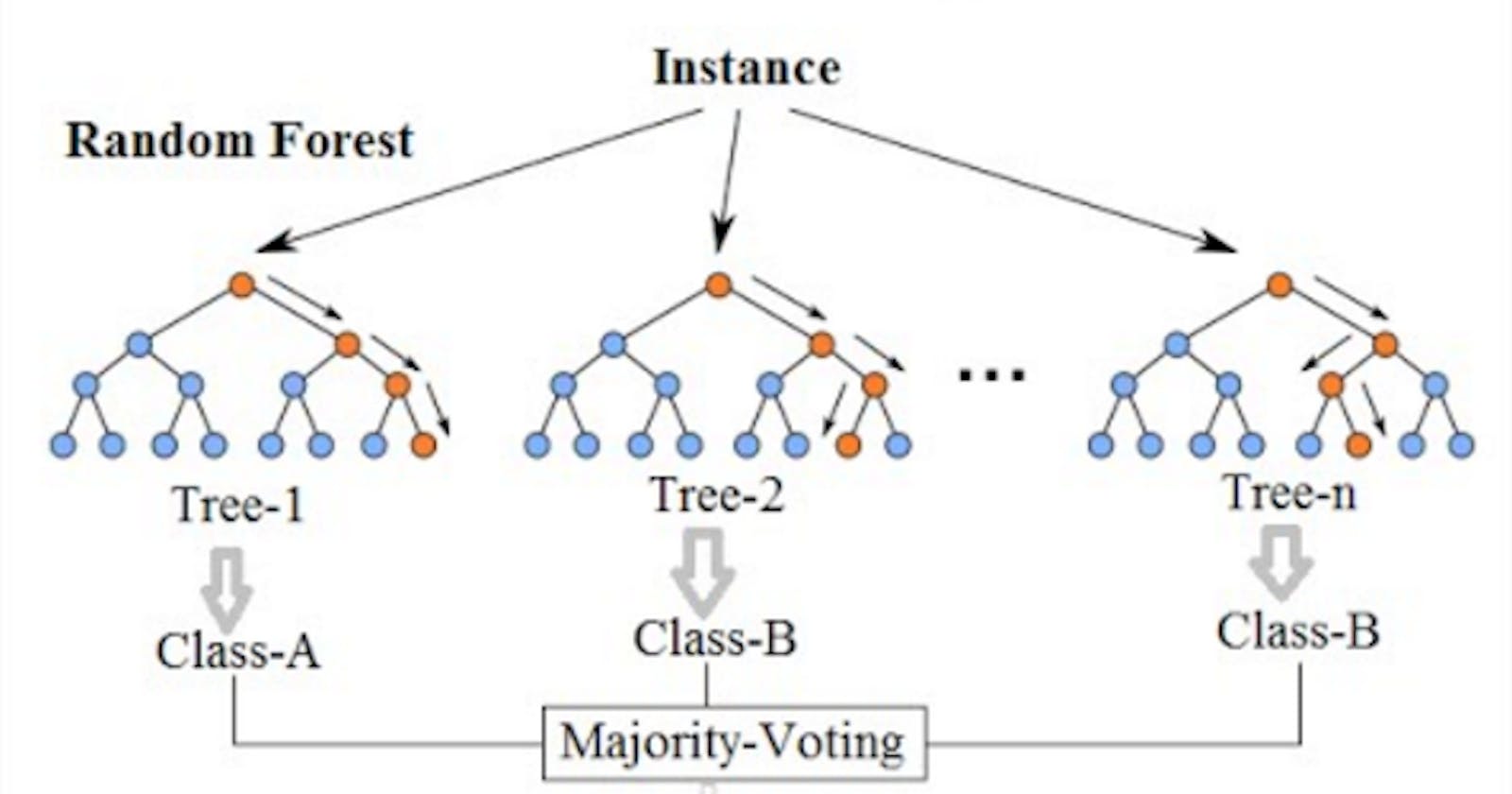

Random Forest is a popular algorithm in machine learning. It improves on the concept of bagging.

There is a fundamental difference between bagging and random forest.

Bagging uses all features in the dataset to build each 🌴, whereas random forest considers only a random subset of features at each split, which produces more diverse learners (classifiers).
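To make the difference concrete, here is a minimal Python sketch (standard library only) of which features a single tree may look at when it chooses one split. The function name `candidate_features` is hypothetical, and the square-root subset size is an assumption for illustration. It is a common choice for classification, and in scikit-learn the subset size is controlled by the `max_features` parameter of `RandomForestClassifier` (for example `max_features="sqrt"`).

```python
import math
import random

def candidate_features(n_features, method, rng):
    """Hypothetical helper: feature indices one tree may consider at a single split."""
    all_features = list(range(n_features))
    if method == "bagging":
        # Bagging: every split may use every feature.
        return all_features
    if method == "random_forest":
        # Random forest (assumed sqrt rule): a fresh random subset
        # of size floor(sqrt(p)) is drawn for each split.
        k = max(1, math.isqrt(n_features))
        return sorted(rng.sample(all_features, k))
    raise ValueError(f"unknown method: {method}")

rng = random.Random(0)
print(candidate_features(16, "bagging", rng))  # all 16 features, every time
for split in range(3):
    # Each split sees a different 4-feature subset.
    print(f"split {split}:", candidate_features(16, "random_forest", rng))
```

Because each split in a random forest only sees a few features, a single very strong feature cannot dominate every tree, and that is where the extra diversity comes from.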

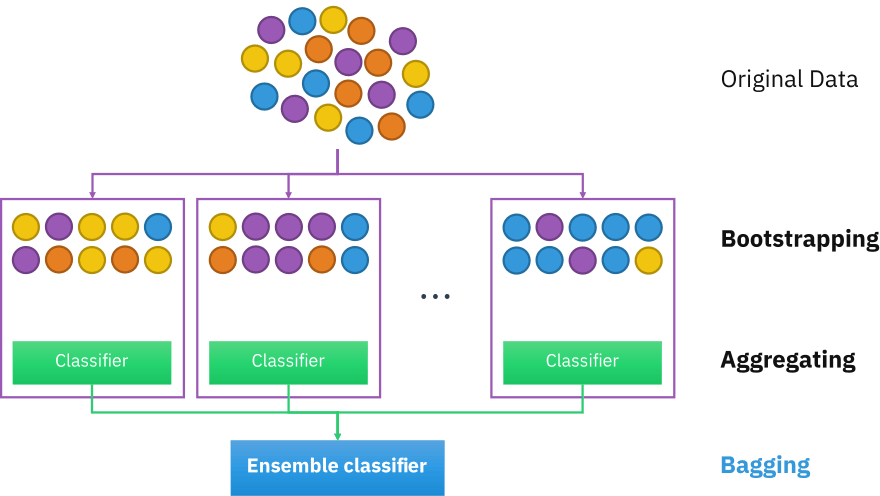

Bagging is short for bootstrap aggregating.

During bootstrapping, random samples of the dataset are drawn with replacement, and a different classifier is built on each sample. During aggregation, the predictions from the different classifiers are combined, and the majority prediction is chosen.
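Both steps can be sketched from scratch in Python. This is a minimal illustration, not a production implementation: the base learner is a hypothetical one-split decision stump (`fit_stump`) rather than a full decision tree, and the toy dataset and the choice of 25 estimators are made up for the example.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Bootstrap step: draw len(data) rows with replacement."""
    return [rng.choice(data) for _ in range(len(data))]

def fit_stump(data):
    """Hypothetical base learner: a one-split decision stump.

    Tries every (feature, threshold) pair and keeps the one whose
    left/right majority labels make the fewest training errors.
    """
    best = None
    n_features = len(data[0][0])
    for f in range(n_features):
        for threshold in sorted({x[f] for x, _ in data}):
            left = [y for x, y in data if x[f] <= threshold]
            right = [y for x, y in data if x[f] > threshold]
            left_label = Counter(left).most_common(1)[0][0]
            right_label = Counter(right).most_common(1)[0][0] if right else left_label
            errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, threshold, left_label, right_label)
    _, f, threshold, left_label, right_label = best
    return lambda x: left_label if x[f] <= threshold else right_label

def fit_bagging(data, n_estimators, seed=0):
    """Train one stump per bootstrap sample."""
    rng = random.Random(seed)
    return [fit_stump(bootstrap_sample(data, rng)) for _ in range(n_estimators)]

def predict(models, x):
    """Aggregation step: majority vote over all classifiers."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]

# Toy data: ((feature_0, feature_1), label); label is 1 when feature_0 > 5.
data = [((i, (i * 7) % 10), int(i > 5)) for i in range(11)]
models = fit_bagging(data, n_estimators=25)
print(predict(models, (10, 0)), predict(models, (0, 0)))
```

An odd number of estimators avoids ties in the vote for a two-class problem.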

We now have multiple classifiers, each exposed to a different portion of the data and carrying its own expertise.

We build N trees this way, so each tree has a slightly different set of information while still sharing overlapping knowledge with the others.
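The "overlapping knowledge" can be quantified. A bootstrap sample of size n contains a given row with probability `1 - (1 - 1/n)^n`, which approaches 1 - 1/e ≈ 0.632 for large n. So each tree sees roughly 63% of the distinct rows, and two independently sampled trees share roughly 0.632² ≈ 40% of them. The short Python simulation below (standard library only; the dataset size and seed are arbitrary choices) checks this empirically.

```python
import random

def bootstrap_indices(n, rng):
    """Row indices of one bootstrap sample: n draws with replacement."""
    return [rng.randrange(n) for _ in range(n)]

def expected_unique_fraction(n):
    """Probability that a given row appears at least once in a bootstrap sample."""
    return 1 - (1 - 1 / n) ** n

n = 10_000
rng = random.Random(42)
tree_a = set(bootstrap_indices(n, rng))
tree_b = set(bootstrap_indices(n, rng))

unique_a = len(tree_a) / n          # share of rows tree A actually sees
shared = len(tree_a & tree_b) / n   # share of rows both trees see
print(f"theory: {expected_unique_fraction(n):.3f}")  # theory: 0.632
print(f"tree A sees {unique_a:.3f} of the rows")
print(f"trees A and B both see {shared:.3f} of the rows")
```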

Isn't it similar to how we make decisions at the organization or even individually?

Bagging is intuitive: different experts learn from diverse data points, which brings diversity to decision making. We bring together people 🚶‍♂️ with diverse backgrounds so each can offer their perspective on the problem, and by combining everyone's expertise we arrive at a robust solution ✌.

Image Source: